Primary Goals

Sound Design

I will write sound design breakdowns for at least three or four projects (initially I am thinking Horse, Bullet, Lantern and Islands), if not all of them. These will include lists of the sound effects required and brief documents discussing the choices made in the sound design and the intended impact of sound and music on the player.

Sound Programming

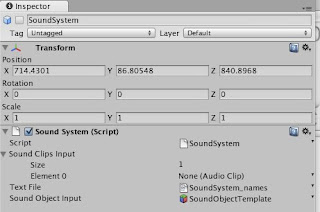

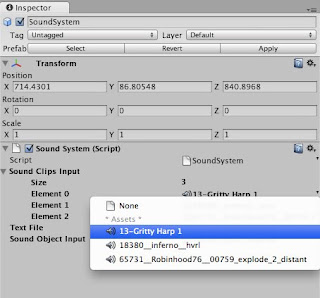

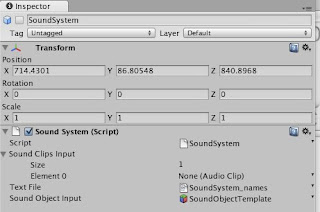

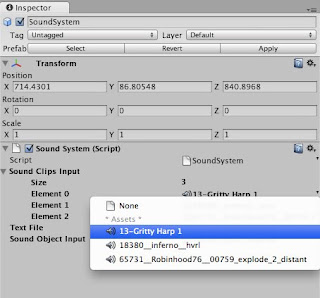

I will build and maintain a sound system for use in Unity projects to streamline adding sound to each game. Its design will depend on the nature of each game, on whether sound needs to be simulated in 3D space, and on the needs of the separate teams.

I am still unclear about the exact nature of this system. I am hoping that over the next week, with some more feedback, I will be able to define the problem better and start building a solution.

Music Composition/Effects Production

I will be composing most, if not all, of the music for Horse. This will include different tunes with various arrangements and styles for different stages of the game. While the music should initially have an 8-bit feel to match the game's retro look, it should change frequently and drastically, in keeping with the subversive nature of the game design.

I have also been asked to compose the music for Bullet. The music direction currently suggested is something along the lines of 'epic strings'.

I am keen to compose music for Islands, as this would let me explore a slightly different realm of composition: rather than music based specifically on the game's visuals or theme, Islands requires music that evokes a specific emotional reaction in the player.

Over the course of the projects, I will build up a library of sound effects based on the sound design for each game. Some of these may be generated using synths or tools such as SFXR; others will be Foley and general effects recorded at my home studio or sourced from free sound effects libraries.

Secondary Goals

Story treatments

Subject to the wishes of the core teams, I will be assisting with the development of narrative contexts for the Lantern and Islands projects. If this goes ahead, I will write story treatments for both games that form part of the overall design and inform how the gameplay mechanics are used.

Design Documentation

This is less an individual focus than part of the overall group design work. I am including it here because I have a specific interest in synthesising design ideas into a clear written format that effectively communicates the core concept of a project. I would like to use my writing abilities to help teams bring their ideas together into a cohesive whole that can be used for reference during development.

I will put my scheduling goals in a separate post, so that I don't get tl;dr problems when I need to refer back to them.